Shantha Kumar T

Introduction to Voice authentication using JavaScript

Authentication is the process of validating a user before allowing them to access secured data. Voice recognition is one of several authentication factors that can be used for this.

I would like to show you how to compare two audio recordings and determine whether they are from the same user/speaker.

First, create the HTML: two audio elements, each with its own Start and Stop buttons. Then add a Compare button to run the validation and an output element to show the result.

    <div class="container">
        <h1>Validate Speaker</h1>
        <div class="header">Speaker 1</div>
        <div class="audio">
            <audio id="audio1" controls></audio>
            <div>
                <button id="btnstartaudio1" onclick="startRecording('audio1')">Start</button>
                <button id="btnstopaudio1" onclick="stopRecording('audio1')" style="display: none;">Stop</button>
            </div>
        </div>
        <div class="header">Speaker 2</div>
        <div class="audio">
            <audio id="audio2" controls></audio>
            <div>
                <button id="btnstartaudio2" onclick="startRecording('audio2')">Start</button>
                <button id="btnstopaudio2" onclick="stopRecording('audio2')" style="display: none;">Stop</button>
            </div>
        </div>
        <button id="btncompare" onclick="compare()">Compare</button>
        <div id="output"></div>
    </div>

Once the HTML is ready, we must capture two voice samples from the speaker. To do that, use the HTML5 Web Audio API together with Recorder.js. Recorder.js converts the recording to WAV format, and FileReader.readAsDataURL turns the result into a base64 data URL, which is the format the REST API requires.

    var audio_context;
    var recorder;

    function startAudioRecorder(stream) {
        // Feed the microphone stream into Recorder.js.
        var input = audio_context.createMediaStreamSource(stream);
        recorder = new Recorder(input);
    }

    window.onload = function init() {
        try {
            window.AudioContext = window.AudioContext || window.webkitAudioContext;
            window.URL = window.URL || window.webkitURL;
            audio_context = new AudioContext();
        } catch (e) {
            alert("Web Audio API is not supported in this browser");
            return; // nothing to record with
        }
        // navigator.getUserMedia is deprecated and missing in some browsers;
        // the promise-based navigator.mediaDevices.getUserMedia replaces it.
        navigator.mediaDevices.getUserMedia({ audio: true })
            .then(startAudioRecorder)
            .catch(function (e) {
                alert("No live audio input in this browser");
            });
    };

The Start and Stop buttons next to each audio element call the following functions to begin and end a recording.

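    function startRecording(ele) {
        // The recorder only exists once microphone access has been granted.
        if (!recorder) { return; }
        // Some browsers keep an AudioContext created at page load suspended
        // until a user gesture; resume it, otherwise the recording stays empty.
        if (audio_context.state === "suspended") {
            audio_context.resume();
        }
        recorder.clear();
        recorder.record();
        document.getElementById('btnstop' + ele).style = "display: inline-block;";
        document.getElementById('btnstart' + ele).style = "display: none;";
    }

    function stopRecording(ele) {
        recorder.stop();
        // Convert the captured audio and load it into the matching audio element.
        createDownloadLink(ele);
        document.getElementById('btnstop' + ele).style = "display: none;";
        document.getElementById('btnstart' + ele).style = "display: inline-block;";
    }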

The user/speaker must click the Start button to begin recording and the Stop button to end it. Stopping converts the captured audio and stores it as the src of the audio element.

    function createDownloadLink(ele) {
        var player = document.getElementById(ele);
        // Export the recording as a WAV blob, then read it as a base64 data URL.
        recorder && recorder.exportWAV(function (blob) {
            var filereader = new FileReader();
            filereader.addEventListener("load", function () {
                player.src = filereader.result;
            }, false);
            filereader.readAsDataURL(blob);
        });
    }
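Before sending a recording anywhere, it can help to check that the data URL stored in the audio element actually contains audio. The following is a minimal sketch and not part of the original code: `wavInfoFromDataUrl` is a hypothetical helper, and it assumes the canonical 44-byte PCM WAV header (format chunk at byte 12, data chunk at byte 36), which I believe is the layout Recorder.js writes. The sample string is a header-only WAV file with no samples.

```javascript
// Hypothetical helper: read basic facts from a WAV data URL.
// Assumes the canonical 44-byte PCM header; other layouts are rejected or misread.
function wavInfoFromDataUrl(dataUrl) {
    var base64 = dataUrl.slice(dataUrl.indexOf(',') + 1);
    var binary = atob(base64); // available in browsers and in Node 16+
    if (binary.length < 44 || binary.slice(0, 4) !== 'RIFF' || binary.slice(8, 12) !== 'WAVE') {
        throw new Error('Not a canonical WAV file');
    }
    var bytes = new Uint8Array(binary.length);
    for (var i = 0; i < binary.length; i++) {
        bytes[i] = binary.charCodeAt(i);
    }
    var view = new DataView(bytes.buffer);
    var byteRate = view.getUint32(28, true);  // bytes of audio per second
    var dataBytes = view.getUint32(40, true); // size of the sample data
    return {
        channels: view.getUint16(22, true),
        sampleRate: view.getUint32(24, true),
        bitsPerSample: view.getUint16(34, true),
        dataBytes: dataBytes,
        durationSeconds: byteRate > 0 ? dataBytes / byteRate : 0
    };
}

// A WAV header followed by zero bytes of audio.
var emptyRecording = 'data:audio/wav;base64,UklGRiQAAABXQVZFZm10IBAAAAABAAIAgLsAAADuAgAEABAAZGF0YQAAAAA=';
console.log(wavInfoFromDataUrl(emptyRecording).durationSeconds); // 0
```

A `dataBytes` value of 0 means nothing was captured, so it is probably better to ask the user to record again than to call the API with that sample.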

Once the voices are captured and converted, call the REST API exposed by the microsoft/unispeech-speaker-verification Space on Hugging Face. This REST API is built on the microsoft/unispeech-sat-large-sv model, which follows the UniSpeech-SAT and x-vector papers.

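    function compare() {
        fetch('https://hf.space/gradioiframe/microsoft/unispeech-speaker-verification/api/predict', {
            method: 'POST',
            headers: { 'Content-Type': 'application/json' },
            // Each recording is sent as the base64 data URL held by its audio element.
            body: JSON.stringify({
                "data": [
                    { "data": document.getElementById('audio1').src, "name": "audio1.wav" },
                    { "data": document.getElementById('audio2').src, "name": "audio2.wav" }
                ]
            })
        }).then(function (response) {
            return response.json();
        }).then(function (data) {
            // The first entry of data.data holds the result message.
            if (data != null && data.data && data.data.length > 0) {
                if (data.data[0] != null) {
                    document.getElementById("output").innerHTML = data.data[0];
                }
            }
        }).catch(function (error) {
            // Show network or parsing failures instead of failing silently.
            document.getElementById("output").textContent = "Comparison failed: " + error.message;
        });
    }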

The API compares the two audio recordings and returns the result as a string message. The compare function calls the API, checks the response, and writes the returned data into the output element.
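The request body and the response check can also be pulled out into small functions that are easy to test without a browser. This is only a sketch: `buildPredictBody`, `isAudioDataUrl`, and `readPredictOutput` are hypothetical names, and the body shape simply mirrors what the compare function above sends.

```javascript
// Hypothetical helper: build the JSON body from the two audio element sources.
// Refuses to build a request when either recording is missing.
function buildPredictBody(src1, src2) {
    if (!isAudioDataUrl(src1) || !isAudioDataUrl(src2)) {
        throw new Error('Record both samples before comparing');
    }
    return JSON.stringify({
        data: [
            { data: src1, name: 'audio1.wav' },
            { data: src2, name: 'audio2.wav' }
        ]
    });
}

// True when the value looks like an audio data URL (for example data:audio/wav;base64,...).
function isAudioDataUrl(src) {
    return typeof src === 'string' && src.indexOf('data:audio/') === 0;
}

// Hypothetical helper: return the first output value, or null when it is absent.
function readPredictOutput(response) {
    if (response && Array.isArray(response.data) &&
            response.data.length > 0 && response.data[0] != null) {
        return response.data[0];
    }
    return null;
}
```

In the page, `buildPredictBody(document.getElementById('audio1').src, document.getElementById('audio2').src)` would supply the `body` option of `fetch`, and `readPredictOutput` would be applied to the parsed JSON.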

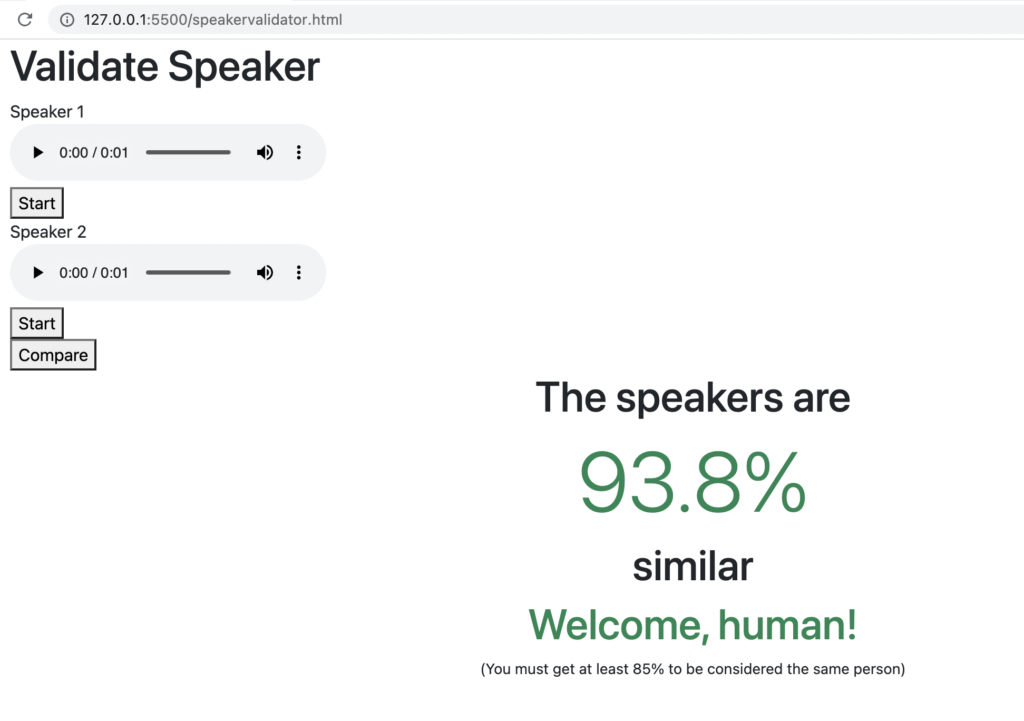

The comparison validates the voices and returns the predicted score as a percentage. If the predicted score is more than 85%, the two audio recordings are treated as coming from the same speaker.

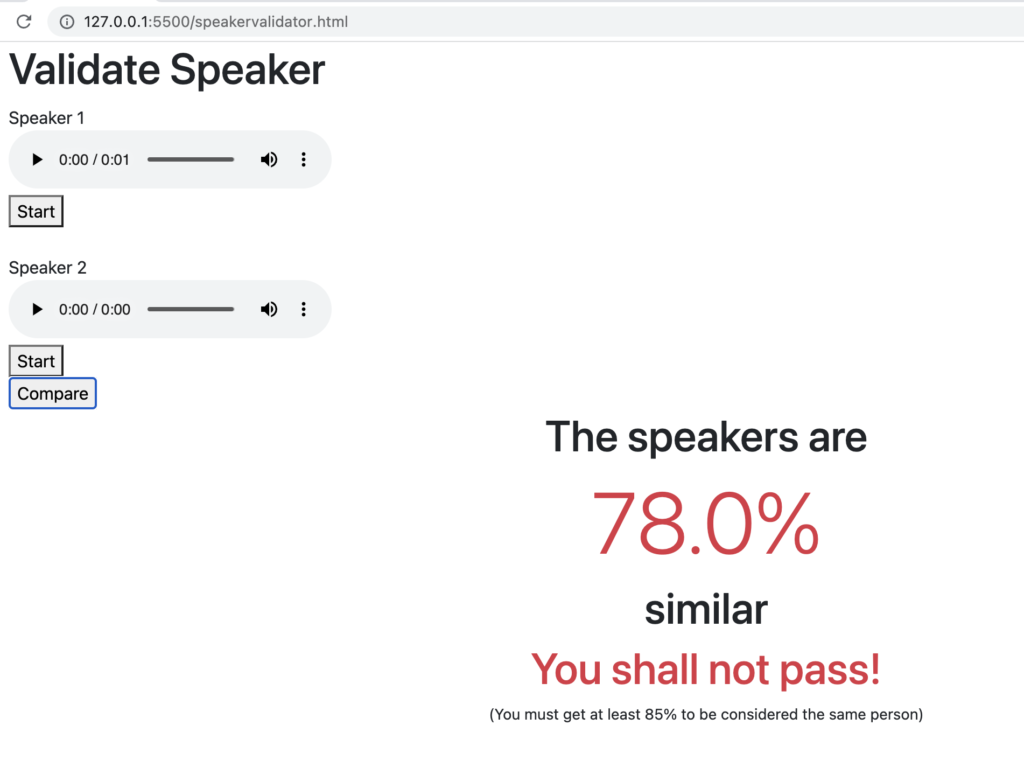

If the score is lower than that, the validation fails and the API returns a failure message.
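Because the result arrives as a message rather than a number, code that needs to act on it has to pull the score out first. The sketch below assumes the message contains the score as a percentage such as "92.3%", possibly wrapped in HTML tags. That format is an assumption about the API output, and `extractScorePercent` and `isSameSpeaker` are hypothetical helpers.

```javascript
// Hypothetical helper: find the first percentage in the message.
// Returns the number (e.g. 92.3) or null when no percentage is present.
function extractScorePercent(message) {
    var text = String(message).replace(/<[^>]*>/g, ' '); // ignore HTML tags
    var match = /(\d+(?:\.\d+)?)\s*%/.exec(text);
    return match ? parseFloat(match[1]) : null;
}

// Apply the cutoff described above: more than 85% counts as the same speaker.
// A message without a score is treated as a failed validation.
function isSameSpeaker(message, thresholdPercent) {
    var threshold = thresholdPercent === undefined ? 85 : thresholdPercent;
    var score = extractScorePercent(message);
    return score !== null && score > threshold;
}
```

Treating a missing score as a failure keeps the check on the safe side if the API changes its wording.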

To use this for authentication, store the user's voice sample in a secure place. The login screen captures audio from the user and validates it against the stored sample. Proceed to the next step based on the result.

We can use the same API for voice recognition and authentication, or we can build our own API on top of the microsoft/unispeech-sat-large-sv model. Either way, this simplifies voice authentication.
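One possible shape for that login step is sketched below. Everything here is hypothetical: `loginWithVoice` is an illustrative name, and `verifySpeaker(enrolled, captured, done)` stands for whatever wrapper calls the comparison service and reports `done(error, isMatch)`. How the enrolled sample is stored and retrieved is left out.

```javascript
// Hypothetical login flow: compare a freshly captured sample with the enrolled one
// and report a decision. Access is denied whenever verification cannot be completed.
function loginWithVoice(enrolledSrc, capturedSrc, verifySpeaker, onDecision) {
    if (!enrolledSrc || !capturedSrc) {
        onDecision({ granted: false, reason: 'missing audio' });
        return;
    }
    verifySpeaker(enrolledSrc, capturedSrc, function (error, isMatch) {
        if (error) {
            onDecision({ granted: false, reason: 'verification unavailable' });
        } else if (isMatch) {
            onDecision({ granted: true, reason: 'speaker matched' });
        } else {
            onDecision({ granted: false, reason: 'speaker did not match' });
        }
    });
}
```

The caller decides what "the next step" is inside `onDecision`, for example showing the protected page when `granted` is true and offering another attempt otherwise.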

voice not recording

Not having any luck getting any playback on the recorded audio – I can see that it loads a data URL into the audio src element, but it seems to have a 0:00 second length. No errors are thrown. I have given the mic audio permissions and verified it is the correct input device.

The audio src looks like `data:audio/wav;base64,UklGRiQAAABXQVZFZm10IBAAAAABAAIAgLsAAADuAgAEABAAZGF0YQAAAAA=`

which doesn’t seem right.

Hello, I think the API stopped working. It only uses the stored recordings and not newly recorded recording. Can you confirm this? Also, is there another API source? Thank you

[Deprecation] The ScriptProcessorNode is deprecated. Use AudioWorkletNode instead.